AI, chatbots and ChatGPT: Threat to knowledge work, or a ‘dancing bear’?

20 June 2023 | Story Helen Swingler. Photo iStock. Read time 5 min.

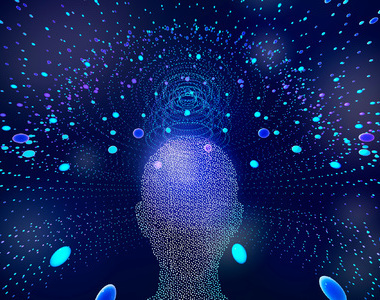

Developments in artificial intelligence (AI)-driven platforms such as OpenAI’s ChatGPT need a cogent response from universities as students turn to this technology to produce their academic outputs – sidestepping critical thinking and research.

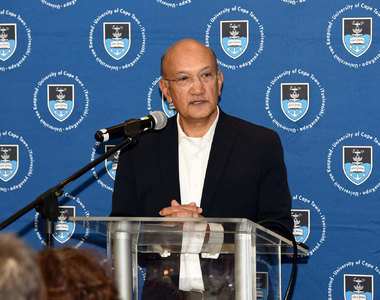

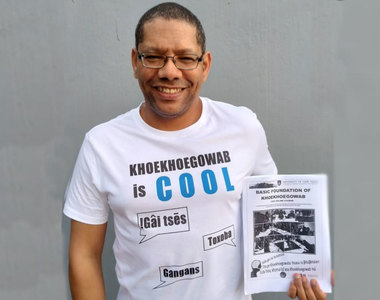

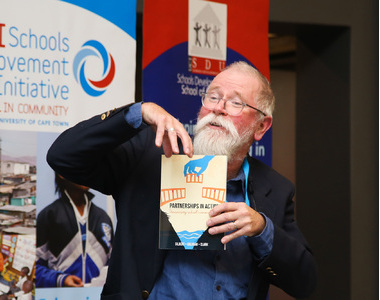

The issue was one of many on the topic of AI addressed by University of Cape Town (UCT) 1972 alumnus Professor Dan Remenyi in his Summer School Extension Series webinar titled “Branded dangerous – AI and the chatbots”.

Professor Remenyi also asked his audience to think carefully about where this technology is taking society.

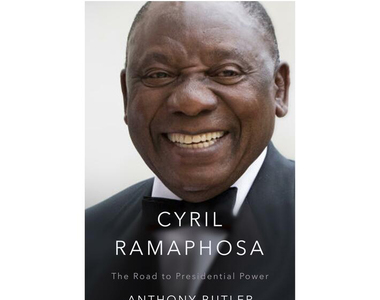

Now living in the United Kingdom, he has been part of the information and communications technology (ICT) industry for several decades and has worked with companies, consultants and leading research universities. The author of several books on ICT, Remenyi is currently an honorary professor in two schools of computing.

While possibilities linked to AI are remarkable, caution is needed, he said.

From an academic perspective, current AI technology fails at essential basics such as generating accurate, original, unbiased, non-repetitive, and academically robust output, backed up by plausible references.

“Some liken chatbot technology to a dancing bear, in that the wonder is not that this technology performs well but that it performs at all. But it is new technology, and it will get better,” said Remenyi.

But are universities actively debating and understanding the effects and limitations of AI and chatbots – and helping their students to do the same? he asked.

On a broader scale, when the potentially dangerous issues emanating from AI chatbots are examined closely, the main threats are security, misinformation and propaganda, data privacy, emotional manipulation, job displacement and loss of control.

“Much of this amounts to the fact that the danger from AI chatbots lies in the potential for malicious actors to use this technology for malevolent purposes,” he added. “At the end of the day, our most powerful way of protecting ourselves is through awareness, education and a healthy attitude of curiosity (and maybe even suspicion).”

Massive investment

“The rate of uptake of this technology has been phenomenal,” he said.

“When OpenAI made ChatGPT available for free at the end of November 2022, it attracted more than a million users in a week.”

Already, the technology has attracted over US$100 billion in investment – and an extraordinary amount of publicity. Commentators have likened the competitive struggle between the tech giants for market share in this sector to an “unseen arms race”.

“There are large sums at stake. The value of the global chatbot market is projected to reach US$10.08 billion by 2026. The amount of money involved is staggering – and unprecedented.”

Existential threat

But the darker side of this arms race involves the larger ethical and social issues: the question of AI’s existential threat to humanity.

“AI chatbots do not yet demonstrate evidence of artificial general intelligence [independent reasoning] but important gurus in the ICT sector believe it is inevitable,” said Remenyi.

Noting how difficult it is to provide plausible forecasts, and quoting former British Prime Minister Benjamin Disraeli’s words about “lies, damned lies, and statistics”, Remenyi said that 50% of AI software engineers currently believe there is a 20% chance that AI will be responsible for the extinction of humans.

In fact, he said, Massachusetts Institute of Technology’s (MIT) Information Technology Professor Max Tegmark suggests that there is a 50% chance AI will wipe out humanity.

“Considering this – and the public call in March by more than 1 500 AI specialists to pause further AI developments beyond the launch of ChatGPT-4 – there is definitely some justification to reflect on what a future populated with AI may hold.”

But it’s difficult to know what a moratorium should look like. Nor has there been any articulation of how AI will destroy humanity.

Some commentators also argue that, thanks to artificial intelligence, alien life created by humans (that is, a living entity not born through natural life processes) will be found in the near future – on Earth.

“This is an allusion to the expectation of some computer experts that an extraordinary configuration of microprocessor technology and ingenious software engineering will allow the creation of an entity that will be fully conscious and independent of any human intervention.”

Although it is essential never to say that anything is impossible, this is, in Remenyi’s view, unlikely in the next few decades.

His perspective is that the more realistic dangers posed by AI are not the “extreme science fiction scenarios”, but those that erode trust, confidence, and ethics.

“The use of AI, even in simple and imperfect applications such as AI chatbots, the ‘dancing bears’, can be dangerous to society. And it is these other less dramatic and more real types of dangers that we should be concerned with.”

This work is licensed under a Creative Commons Attribution-NoDerivatives 4.0 International License.

Please view the republishing articles page for more information.