UCT launches AI guides for staff and students

18 September 2023 | Story Helen Swingler. Photo iStock. Voice Cwenga Koyana. Read time 10 min.

The University of Cape Town’s (UCT) Centre for Innovation in Learning and Teaching (CILT) has launched three guides to help staff and students navigate artificial intelligence (AI) and AI-driven tools such as ChatGPT in their teaching and learning, assessments and other work.

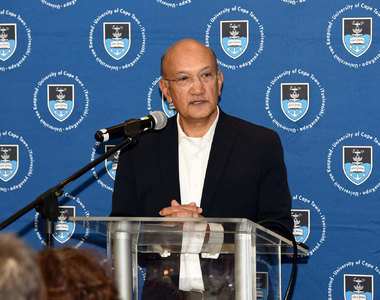

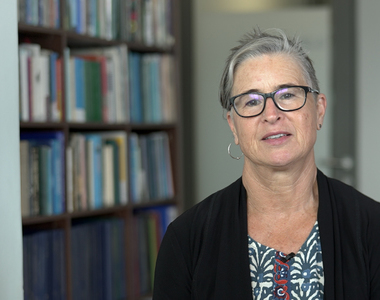

The guides will help the UCT community understand the potential positive and negative impacts of generative AI technologies and their repercussions for higher education, said CILT director, Sukaina Walji.

There are two guides for staff and one for students.

What the guides contain

- The “Staff Guide: Assessment and academic integrity in the age of Artificial Intelligence” addresses the challenge of assessing students now that generative AI tools are widely available. It examines various issues and offers practical strategies, approaches and recommended tools to safeguard academic integrity. It also shows staff how to mitigate the potential threats that AI tools pose to the assessment process.

- The “Staff Guide: Teaching and Learning with AI tools” is a comprehensive guide on the uses of AI tools like ChatGPT in teaching and learning. It explores potential applications, examines ethical concerns and provides additional resources.

- The “Student Guide: Using ChatGPT and other Artificial Intelligence (AI) Tools in Education” offers advice on ethical practices when using this technology in an educational setting. It also provides insights and recommendations on how to approach these tools responsibly while maintaining academic integrity.

The guides were compiled by CILT between May and July this year, after a request by UCT’s AI Tools in Education Working Group. This working group harnesses cross-institutional and faculty perspectives on AI to develop and shape institutional responses to the rapidly evolving technological landscape. The documents will be regularly updated, and guides for researchers will be added.

Risks and opportunities

“The availability and uptake of generative AI tools such as ChatGPT is impacting how university staff and students teach, learn and assess information,” said Walji, who is also the chair of the UCT Online Education Sub-Committee.

While AI tools introduce risks to the learning environment, they also bring many opportunities, she added.

“It is important that the guides address both students in the context of their field of study, and staff in their field of research or teaching. Both (students and staff) need to develop ways of ethically using these technologies,” said Walji.

“They should also be aware of the constraints and limitations of these – and the potential for innovation and enhancement in teaching and learning. These tools are exciting in many ways and for many disciplines.”

Assessment integrity

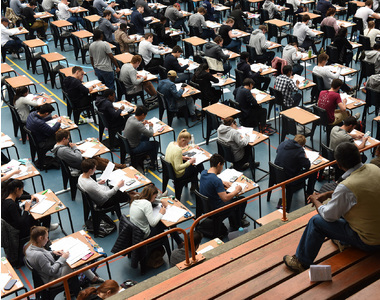

One of the main concerns for academic staff is assessment integrity. How much of what is being produced is AI generated and how much is the student’s work?

“Clearly, some students are using these tools to produce essays, codes, writing assignments, graphics and even videos. It’s going to become increasingly difficult to defend offering existing kinds of assessments going forward, given the availability of these tools.”

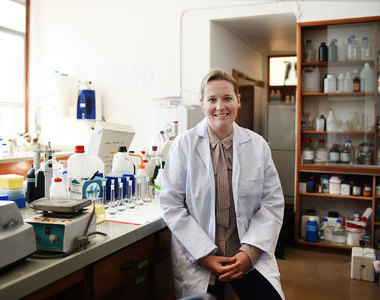

CILT deputy director, Dr Tabisa Mayisela, who worked with a team of colleagues to develop the guides, said there is currently no tool accurate enough to definitively detect the use of generative AI in student assignments.

As a result, some departments are, for example, returning to face-to-face invigilated exams, said Walji.

But she cautions against stop-gap measures, which may not be based on sound assessment techniques. CILT’s stance is that this is an opportunity for faculties to rethink their assessments.

Essays are an example. Although essays are meant to develop students’ critical-thinking abilities, generative AI tools like ChatGPT can produce them within minutes on a wide range of topics. In response, some academic staff are allowing students to use the tool to generate a draft, but then asking them to critique it.

“Those are some of the strategies that are developing,” said Walji.

CILT has begun to collect assessment practice case studies that show how staff are changing their assessment methods for the better and demonstrate how AI tools can be used to their advantage.

Walji also hosts regular panel discussions for the UCT community, looking at assessments and AI trends, identifying pitfalls and valuable tools. Topics have included: “How can we respond to ChatGPT in the classroom: restrict, adapt or adopt?” and “Challenges with AI detection tools: ethical & practical concerns”. Video recordings of these discussions are also available on CILT’s YouTube channel.

At a sectoral level, she is liaising with directors of teaching and learning centres at other South African universities to share resources and experiences.

“We’re going to have to grow our understanding through the sharing space – and that’s a rich space in the higher education sector; a variety of networks, people constantly exploring the space, posting new resources and thoughts on education technology and teaching. We find that very exciting because it stretches opportunities.”

Locking in the mind

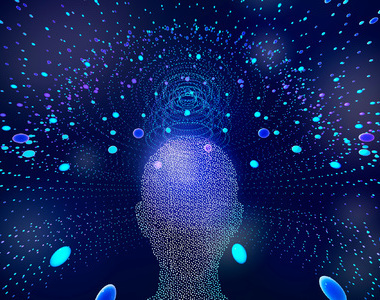

Nonetheless, there are watchpoints that staff and students need to be made aware of, particularly concerning the role of human creativity and originality.

“We need to be clear about the characteristics and limitations of these tools and not humanise them,” Walji said. “These are effectively statistical models that deploy algorithms on large amounts of data to produce content that is the most plausible or statistically likely.

“It’s not a database; it’s not a search engine. There’s no actual reference so that you can query the provenance of the data. There’s no kind of underlying understanding, consciousness or world-view framing in these tools. It’s not a knowledge base that you’re querying. And this explains many of the mistakes and the anomalies that come up.

“If you use it as a dialogic tool, a kind of chatbot tutor, then that’s something else, because you’ve got a different engagement with it. And we’re still in the learning and experimental phase of better understanding the use cases of these tools.”

Inequality and hegemony

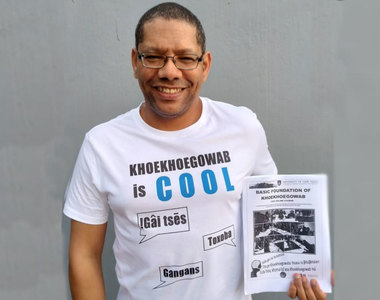

Inequality is also inherent in what current generative AI models produce.

Broadly, AI models such as ChatGPT-3.5 are trained on a massive corpus of text data published on the internet up to 2021. This corpus mostly represents the English-language Western canon and northern hemisphere perspectives, because that is where the majority of the data originates, said Walji.

“Because it’s based on statistics and frequency in the data, you could end up perpetuating Western thought, rather than freeing the mind.”

Copyright and authorship are also ethical issues.

“If it is produced by ChatGPT, is it you who has copyright or authorship? Or is it the tool? These are all very interesting ethical questions that are now in the university space.”

Paywalls for more developed versions of the tools are another factor affecting equality in the higher education space, noted CILT’s Course Development Manager, Janet Small, who worked on the development of the AI guides.

“The tools we’re discussing most are the free, unlicensed AI tools like ChatGPT-3.5. But increasingly, paid-for versions are emerging because companies are trying to make money. This will become a larger concern in time once the better tools become locked down in licences. This will advantage students who can afford to pay.

“We don’t want any more barriers in terms of engagement with tools that make equal participation difficult.”

Nonetheless, it is important that students develop these critical digital and information literacies.

“Students need to know how to effectively engage with AI tools so that they don’t just accept any information that is generated. They must learn how to engage critically with it; get them thinking about the checks and balances in using AI in a balanced way,” said Walji.

It’s a topic that is particularly relevant to preparing students for the workplace of the future, Small added.

“Our students will be going into a workplace that will be informed, enabled and driven by AI in many cases. Different jobs and disciplines are going to be changed by AI. The onus is on us, UCT, to educate our students about using AI and generative AI, in particular.”

Ideally, students should start learning about the pros and cons of AI and digital literacies from the outset, beginning with first-year orientation.

“It’s a space to watch,” said Small.

“We’ve started a series of conversations at UCT, which I am sure will gather momentum, because it’s a very changeable space. We need people in this conversation, giving feedback so that it’s a knowledge-building exercise where we’re learning and sharing what we know as a community to find the best possible solutions.”

Mayisela added that this year’s Teaching & Learning Conference has an AI and assessment track, which is currently open for submissions.

Please visit the UCT Teaching and Learning Conference website for details and registration. To get notifications about upcoming events, including the monthly AI panels, subscribe to the CILT events mailing list.

This work is licensed under a Creative Commons Attribution-NoDerivatives 4.0 International License.

Please view the republishing articles page for more information.